INFO4320 Physical Computing and Rapid Prototyping Final Project

Musical Dancing Robot

Overview

We designed a gesture-controlled tabletop robot that lets users select music with a hand gesture and then watch the robot dance to it, pairing coordinated body motion with expressive facial expressions.

The goal was to explore how robots can communicate affect through synchronized movement, sound, and expression, translating music’s emotional tone into embodied performance.

Concept

The project began with the idea of a “musical companion”: a small robot that reacts playfully to its environment.

Our final prototype enables users to:

Select music by moving a hand over the robot’s arm sensor.

Observe a personalized dance as the robot interprets the rhythm and mood of the chosen track.

Read the robot’s emotion from its face, an LCD screen whose expression mirrors the music’s energy (e.g., happy, calm, excited).
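The gesture-based song selection above can be sketched as a small state machine. This is a minimal sketch, not the project's actual code. It assumes three things:

- The flex sensor is read as a raw 12-bit ADC value (0 to 4095, the usual default on the ESP32).
- Each bend advances to the next track.
- The class name and threshold values are hypothetical.

It uses two thresholds (hysteresis) so that a single bend is not counted as several selections while the reading hovers near the boundary.

```cpp
#include <cstddef>
#include <cstdint>

// Hypothetical thresholds on a raw 12-bit ADC reading (0-4095).
// Real values depend on the flex sensor and voltage divider used.
constexpr uint16_t kBendOnThreshold = 2600;   // above this, the hand counts as "bent"
constexpr uint16_t kBendOffThreshold = 2300;  // must drop below this to re-arm

// Hypothetical helper: cycles through tracks, one step per bend gesture.
class GestureTrackSelector {
 public:
  explicit GestureTrackSelector(std::size_t trackCount) : trackCount_(trackCount) {}

  // Feed one sensor sample. Returns true only on the sample where a new
  // bend begins, which advances (and wraps) the selected track index.
  bool update(uint16_t reading) {
    if (!bent_ && reading > kBendOnThreshold) {
      bent_ = true;
      current_ = (current_ + 1) % trackCount_;
      return true;
    }
    if (bent_ && reading < kBendOffThreshold) {
      bent_ = false;  // hand released: ready for the next gesture
    }
    return false;
  }

  std::size_t currentTrack() const { return current_; }

 private:
  std::size_t trackCount_;
  std::size_t current_ = 0;  // index of the selected track
  bool bent_ = false;        // true while a bend is in progress
};
```

On the robot, `update()` would be called with each new sensor sample, for example a value from `analogRead` in the Arduino `loop()`. A `true` return would start the newly selected song and its dance.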

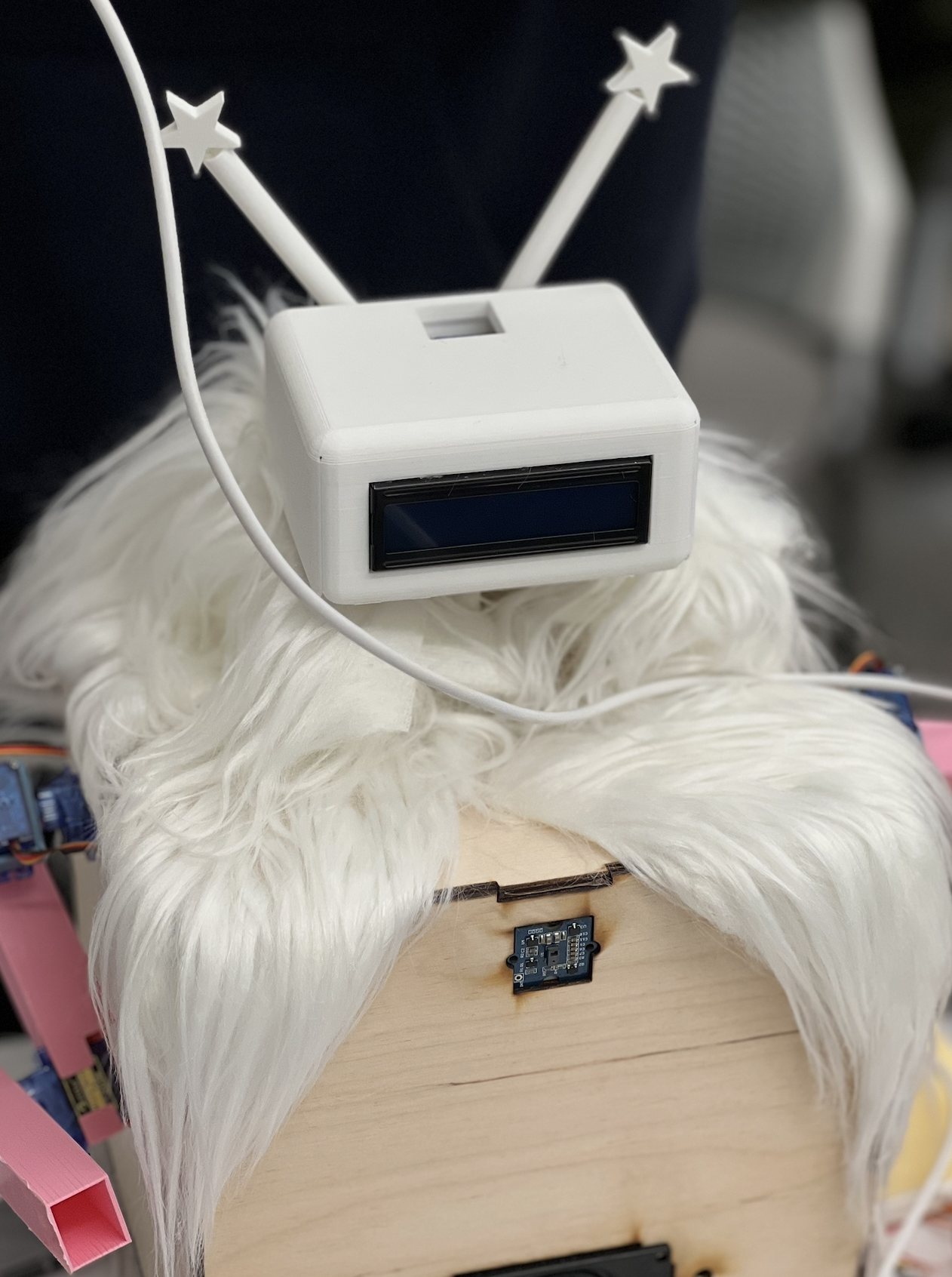

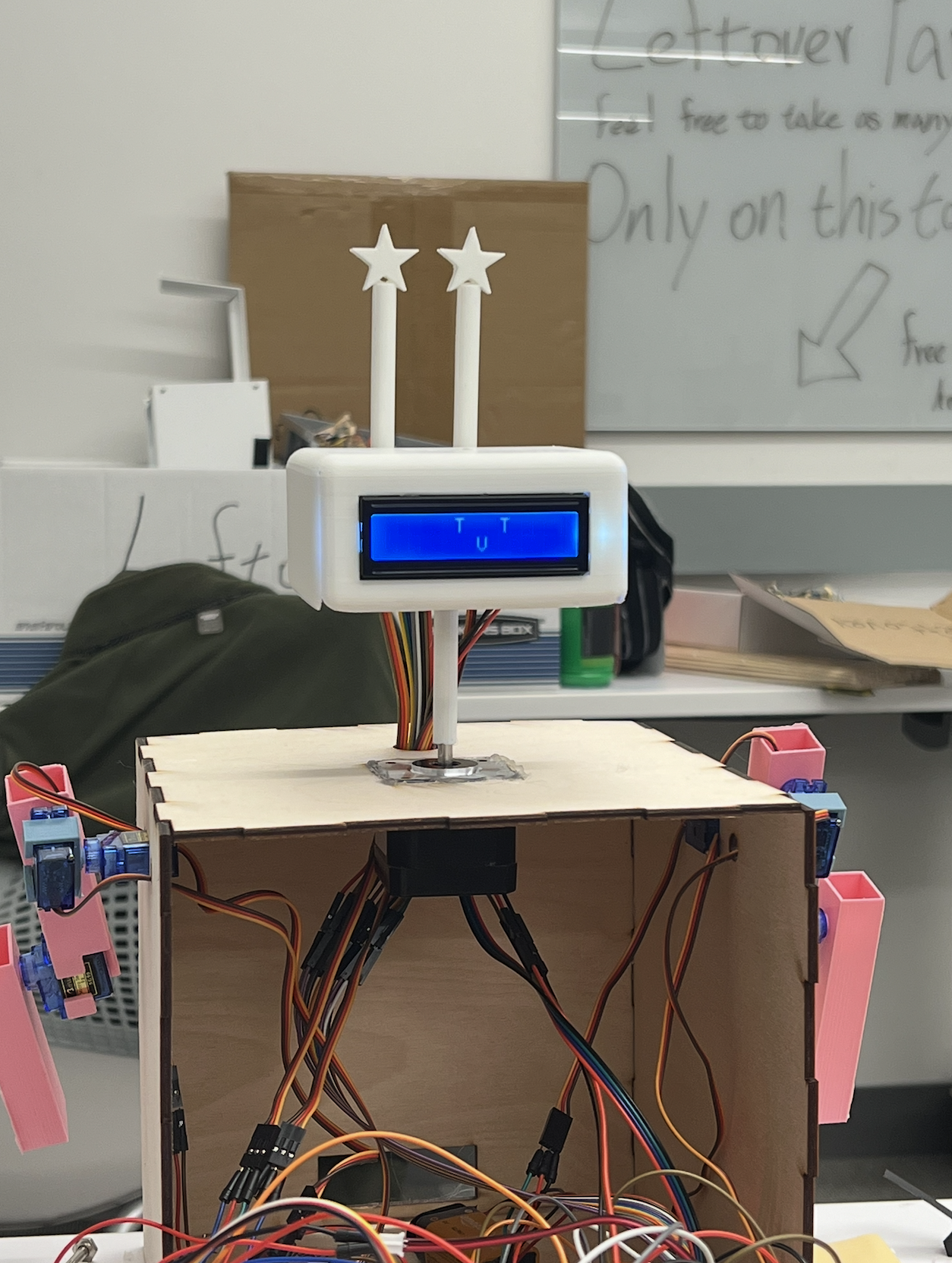

Hardware & Interaction Design

Core: ESP32 microcontroller

Sensors: Flex sensor embedded in the robot’s hand for gesture recognition

Actuators: Servo motors driving head, arms, and body movement

Display: LCD screen showing real-time facial expressions synchronized with music

Audio: Mini speaker module for on-board music playback

The design prioritizes simplicity and clarity of expression. Each song is tied to a unique choreography pattern whose tempo and motion amplitude reflect the song’s emotional tone.
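The song-to-choreography mapping described above can be sketched as a lookup table. This is an illustrative sketch rather than the project's implementation. The following are all assumptions:

- the track names, tempos and amplitudes;
- the sinusoidal sway;
- the 90° servo center.

In the table, tempo (BPM) sets how fast the servos oscillate and amplitude sets how far they swing. Each entry also carries the emotion the LCD face should show.

```cpp
#include <array>
#include <cmath>

// Hypothetical emotion labels shown on the LCD face.
enum class Emotion { Calm, Happy, Excited };

// One choreography per song: tempo drives oscillation speed,
// amplitude drives how far the servos swing around their center.
struct Choreography {
  const char* song;    // hypothetical track name
  float bpm;           // beats per minute
  float amplitudeDeg;  // peak servo swing in degrees
  Emotion face;        // expression shown while the song plays
};

// Hypothetical table; values are illustrative, not measured.
constexpr std::array<Choreography, 3> kDances = {{
    {"calm_track", 70.0f, 15.0f, Emotion::Calm},
    {"happy_track", 120.0f, 30.0f, Emotion::Happy},
    {"excited_track", 140.0f, 45.0f, Emotion::Excited},
}};

// Assumed neutral position of a 0-180 degree hobby servo.
constexpr float kCenterDeg = 90.0f;

// Servo angle at time tMs after the song starts: the sine completes one
// full sway per beat, and the result is clamped to the servo's 0-180 range.
float servoAngle(const Choreography& c, unsigned long tMs) {
  const float kPi = 3.14159265f;
  float beatsElapsed = (tMs / 60000.0f) * c.bpm;
  float angle = kCenterDeg + c.amplitudeDeg * std::sin(2.0f * kPi * beatsElapsed);
  return std::fmin(180.0f, std::fmax(0.0f, angle));
}
```

A sine wave is only one possible motion profile. Because each dance is plain data, a song's pattern can be retuned without touching the motion code.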